Neural Networks and Decision Trees & Architectures for Big Scale 2D Imagery

The meetup “Neural Networks and Decision Trees & Architectures for Big Scale 2D Imagery” was organised by the London Machine Learning Meetup and featured two very interesting talks: one on combining neural networks with decision trees, and one on architectures for 2D image processing.

The first talk, on Adaptive Neural Trees (ANTs), was presented by Ryutaro Tanno, a PhD researcher at UCL.

An ANT is a model that incorporates representation learning into the edges, routing functions and leaf nodes of a decision tree. ANTs are essentially built by combining a decision tree with neural networks: every node of the tree is represented by a neural network instead of a linear model. Ryutaro showed how neural networks and decision trees can complement each other and overcome the weaknesses of each individual technique. The main goals of ANTs include learning the architecture from data instead of designing it by hand, keeping the model lightweight, and learning features from data automatically.

ANTs have several advantages over plain decision trees or neural networks, which were demonstrated on several experimental datasets:

- feature learning from data: this important property of neural networks is preserved in ANTs,

- scalable and adaptive learning, a property taken from neural networks,

- an architecture that adapts to the data, a property inherited from decision trees,

- lightweight inference that activates only a small portion of the model, a property also taken from decision trees.
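To make the lightweight-inference point concrete, here is a minimal sketch in plain Python rather than the speaker's implementation. It assumes a fixed binary tree of depth 2, random untrained weights, and hypothetical names (`Leaf`, `Internal`, `dense`, `random_layer`); it does not show how an ANT grows its architecture during training. Each internal node holds a small neural router and one feature transformer per outgoing edge, and each leaf holds a small predictor.

```python
import math
import random

def dense(weights, bias, x, activation=None):
    """One fully connected layer: activation(W x + b)."""
    out = [sum(w * xi for w, xi in zip(row, x)) + b
           for row, b in zip(weights, bias)]
    return [activation(v) for v in out] if activation else out

def random_layer(rng, n_in, n_out):
    """Random (untrained) weights; a real model would learn these."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

class Leaf:
    """Leaf node: maps the transformed features to class scores."""
    def __init__(self, rng, dim, n_classes):
        self.layer = random_layer(rng, dim, n_classes)

    def predict(self, x, visited):
        visited.append(self)
        return dense(*self.layer, x)

class Internal:
    """Internal node: a neural router plus one transformer per edge."""
    def __init__(self, rng, dim, left, right):
        # Router is a two-layer network, not a linear split.
        self.router = [random_layer(rng, dim, dim), random_layer(rng, dim, 1)]
        self.edges = [random_layer(rng, dim, dim), random_layer(rng, dim, dim)]
        self.children = [left, right]

    def predict(self, x, visited):
        visited.append(self)
        hidden = dense(*self.router[0], x, math.tanh)
        p_right = dense(*self.router[1], hidden, sigmoid)[0]
        branch = 1 if p_right >= 0.5 else 0  # follow the likelier branch only
        features = dense(*self.edges[branch], x, math.tanh)
        return self.children[branch].predict(features, visited)

rng = random.Random(0)
DIM, N_CLASSES = 4, 3  # illustrative sizes, not taken from the talk

def build(depth):
    if depth == 0:
        return Leaf(rng, DIM, N_CLASSES)
    return Internal(rng, DIM, build(depth - 1), build(depth - 1))

tree = build(2)  # 3 internal nodes and 4 leaves, 7 nodes in total
visited = []
scores = tree.predict([0.5, -1.0, 0.25, 2.0], visited)
print(len(scores), "class scores;", len(visited), "of 7 nodes evaluated")
```

Because only one branch is followed at each router, a single prediction touches one root-to-leaf path (three nodes here) rather than the whole tree.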

The second talk was presented by another UCL PhD researcher, Zbigniew Wojna.

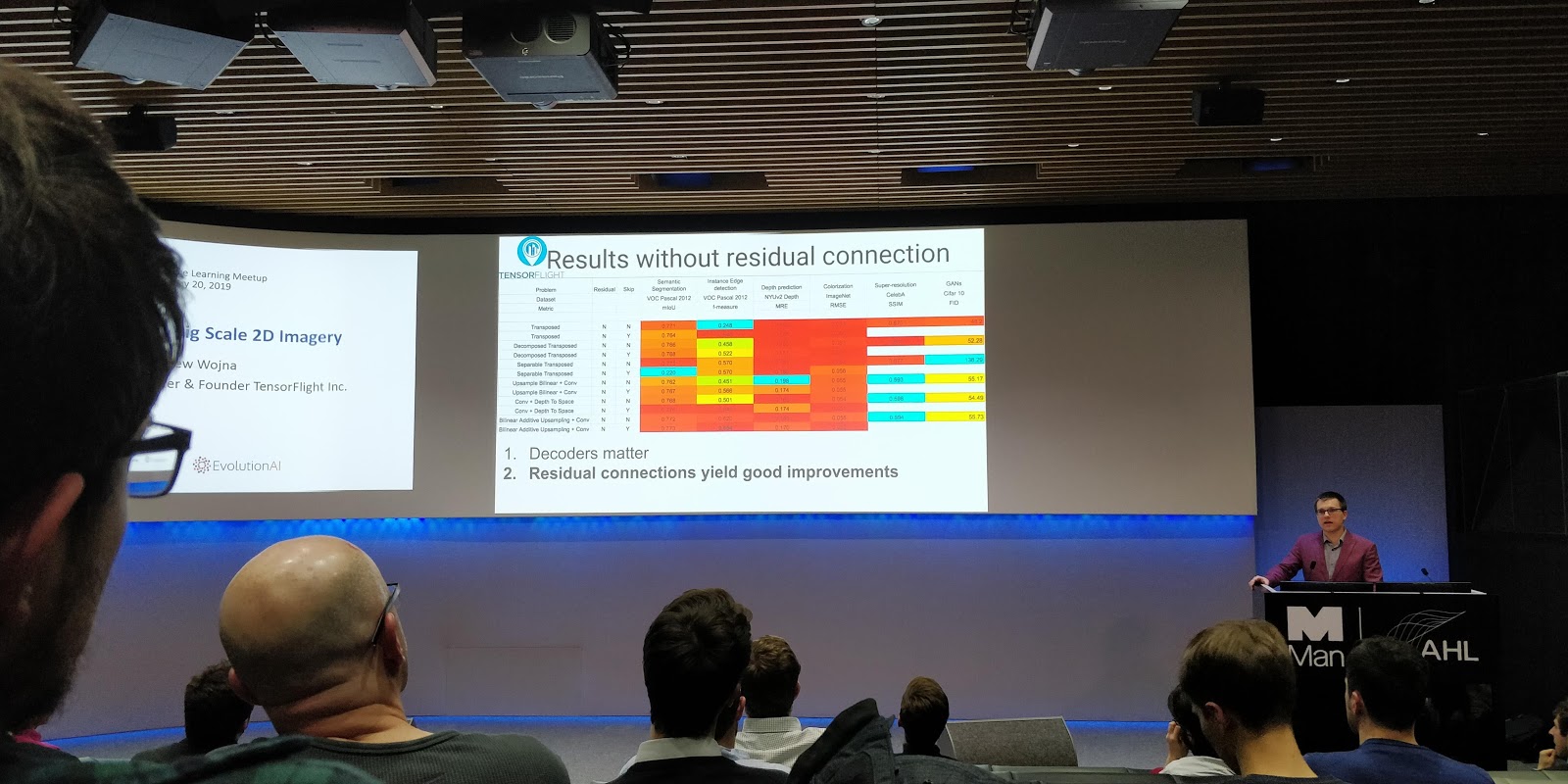

He proposed bilinear additive upsampling, which sums different channels during the upsampling process. The process first upsamples the input with bilinear interpolation, doubling its resolution, and then sums pixels across different channels. The approach is particularly useful with residual-like connections: where traditional upsampling approaches produce noisy results, adding additive upsampling makes the results more consistent. The researchers compared six upsampling methods: transposed convolution, decomposed transposed convolution, convolution followed by depth-to-space, bilinear upsampling followed by convolution or by separable convolution, and finally bilinear additive upsampling with convolution.
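The two steps of bilinear additive upsampling can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' code: the feature map is a channels-first list of 2D lists, the function names are hypothetical, and the group size of 4 is an assumption chosen so that doubling both height and width while dividing the channel count by four keeps the total number of values unchanged. The convolution that follows the upsampling in the compared method is omitted.

```python
def bilinear_upsample_2x(channel):
    """Upsample one 2D channel (a list of rows) to twice its height and width."""
    h, w = len(channel), len(channel[0])
    out = []
    for i in range(2 * h):
        # Map the output pixel centre back to input coordinates, clamped to the border.
        y = min(max((i + 0.5) / 2 - 0.5, 0.0), h - 1)
        y0 = int(y)
        y1 = min(y0 + 1, h - 1)
        fy = y - y0
        row = []
        for j in range(2 * w):
            x = min(max((j + 0.5) / 2 - 0.5, 0.0), w - 1)
            x0 = int(x)
            x1 = min(x0 + 1, w - 1)
            fx = x - x0
            top = channel[y0][x0] * (1 - fx) + channel[y0][x1] * fx
            bottom = channel[y1][x0] * (1 - fx) + channel[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out

def bilinear_additive_upsample(feature_map, group_size=4):
    """Upsample every channel, then sum each group of consecutive channels.

    feature_map: list of channels, each a list of rows (channels-first).
    group_size:  number of channels summed into one output channel (assumed 4).
    """
    if len(feature_map) % group_size != 0:
        raise ValueError("channel count must be divisible by group_size")
    upsampled = [bilinear_upsample_2x(c) for c in feature_map]
    height, width = len(upsampled[0]), len(upsampled[0][0])
    out = []
    for start in range(0, len(upsampled), group_size):
        group = upsampled[start:start + group_size]
        out.append([[sum(ch[i][j] for ch in group) for j in range(width)]
                    for i in range(height)])
    return out

# Eight constant 2x2 channels with values 0..7.
features = [[[float(k)] * 2 for _ in range(2)] for k in range(8)]
result = bilinear_additive_upsample(features)
print(len(result), "channels of", len(result[0]), "x", len(result[0][0]))
```

In this toy input, eight 2x2 channels become two 4x4 channels, so the output holds the same 32 values as the input while the spatial resolution doubles.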

Validation of the proposed approach showed that bilinear additive upsampling works very well, especially in combination with residual connections. Compared with transposed convolution and depth-to-space, it produced much smoother upsampled images with far less pixelation.